Xoltar

An online notebook by Bryn Keller

Machine learning, drones, and whales: A great combination!

Last June, a simple question changed my life: “Hey Bryn, what do you know about whales?” Of course, like most people, my answer was “Not much,” but that marked the beginning of an important project to help track the health of whale populations by using machine learning to analyze video from drones. Parley for the Oceans introduced me and my colleagues Ted Willke and Javier Turek to Dr. Iain Kerr of Ocean Alliance, and we started talking about how machine learning could help make marine biologists’ lives easier and help protect the whales, using the video from Dr. Kerr’s SnotBot drones.

SnotBot drone with Petri dishes, courtesy of Christian Miller

SnotBot

Dr. Kerr started the SnotBot program because in the not-so-distant past, when people wanted to understand the health of a whale, or get its DNA, the only way to do this was to shoot it with a crossbow with a specially prepared bolt with a string on it, that would only go a couple of inches into the (remember, bus-size) body. The bolt would then be reeled in and the sample could be extracted from it. Iain realized there was a better way, that didn’t involve crossbows or Zodiac inflatable rafts: put a Petri dish on a drone, and fly the drone through the blow that whales exhale when they come to the surface. It turns out there are all kinds of interesting things in that exhaled breath condensate (i.e. snot), like DNA, hormones, and microbiomes. Much less stress on the whale, and easier on the humans, too.

Drones and Cameras

These days, drones are equipped with some pretty amazing cameras, and the ones that are built for camera work are very stable in the air: they use GPS to hold their position and have camera gimbals that compensate for any tilting of the aircraft. As a result, you can get some excellent video of whales. With cameras that good in the air, we realized we could do two important things using machine learning: we could identify individual whales, and we could do health assessments based on body condition.

Whale Research in Alaska

The good folks at Alaska Whale Foundation shared their database of known humpback whales that they had been studying in the Alaskan waters for years, and Ocean Alliance shared a lot of the video they had collected over the years with drones, and we got to work. In a few short weeks, we built some software prototypes, and then we set off to Alaska with Ocean Alliance, Parley, and the Alaska Whale Foundation, to test the software out.

To get to the whales, we needed to take a plane, like you’d expect. In Alaska, the planes are a little different.

The seaplane

And of course, we had lodgings that were also different from what you’d expect on a normal business trip…

The Glacier Seal, the boat we lived on for a week. Photo courtesy of Christian Miller

Plus the rental car…

Rental car

It was an amazing experience on many levels.

Image obtained under NMFS #18686

Identification

Identifying individual whales is important for many reasons. In the case of humpback whales in Alaska, there are two populations: one that migrates to Hawaii in the winter, and one that migrates to Mexico in the winter. The Mexico population is much more endangered, so NOAA asks that researchers avoid interacting with the Mexico population if possible. But how is a researcher to know whether the whale in front of them is from Mexico or from Hawaii? Only if he or she can identify the individual.

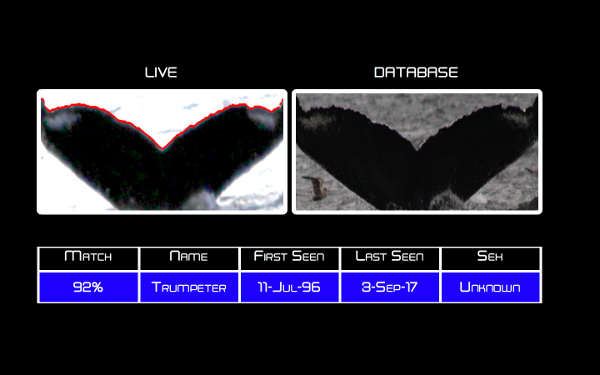

We built a program that could compare a snapshot of a humpback whale’s tail flukes against a database of known whales, using the shape of the ragged trailing edge and the amount of grey or white spotting on the tail. With it, we were able to identify several whales in Alaska while the drone was still in the air over them, which opens up lots of possibilities. Before this, researchers would usually identify the whales days or months after the fact, if at all.
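As a rough illustration of the matching idea (not the actual software), here is a minimal sketch that compares only the shape of the trailing edge. It assumes the edge has already been extracted from the photo as a one-dimensional height profile; the function names, the 64-point resampling, and the made-up profiles are all hypothetical, and the pigmentation cue mentioned above is left out entirely.

```python
import numpy as np

def normalize_profile(edge_y, n_points=64):
    """Resample a trailing-edge height profile to n_points and standardize it."""
    edge_y = np.asarray(edge_y, dtype=float)
    # Resample so profiles traced at different image sizes are comparable.
    src = np.linspace(0.0, 1.0, len(edge_y))
    dst = np.linspace(0.0, 1.0, n_points)
    resampled = np.interp(dst, src, edge_y)
    # Remove offset and scale so only the shape of the edge matters.
    centered = resampled - resampled.mean()
    std = centered.std()
    return centered / std if std > 0 else centered

def best_match(query_edge, database):
    """Return (name, distance) of the database profile closest to the query."""
    q = normalize_profile(query_edge)
    scores = {
        name: float(np.linalg.norm(q - normalize_profile(edge)))
        for name, edge in database.items()
    }
    name = min(scores, key=scores.get)
    return name, scores[name]

# Made-up profiles for illustration only; these are not real whale data.
x = np.linspace(0.0, 1.0, 200)
database = {
    "whale_a": np.sin(6 * np.pi * x),
    "whale_b": np.abs(x - 0.5),
}

# A noisy, rescaled, lower-resolution view of whale_b's edge.
rng = np.random.default_rng(0)
x_query = np.linspace(0.0, 1.0, 120)
query = 3.0 * np.abs(x_query - 0.5) + 10.0 + rng.normal(0.0, 0.01, 120)

name, distance = best_match(query, database)
print(name, round(distance, 3))
```

Standardizing each profile makes the comparison insensitive to how large the flukes appear in the frame, which matters when the drone's altitude varies between photos. A real system would also need to cope with flukes photographed at an angle.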

A discovery

While we were out on the water, listening to a hydrophone, we heard a whale feeding call. It was very distinctive, and Dr. Fred Sharpe from Alaska Whale Foundation said he thought it sounded familiar. He checked his archival database on his laptop, and found a similar call from 20 years earlier, from a whale named Trumpeter. A few minutes later, a whale surfaced, and then we got a picture of its flukes when it dove. We ran it through our software, and we got a match! Sure enough, it was Trumpeter! So we learned something nobody else has known for sure: humpback whale feeding calls remain stable in adults over decades. It wasn’t a question we set out to answer, but it fell into our laps, purely because we were able to identify the whale swimming in front of us.

“Live” flukes under NMFS #18636, “Database” under NMFS #14599

Health Assessment

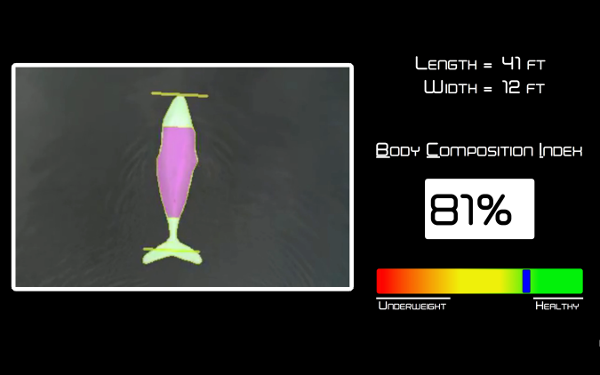

There are lots of things we don’t know about whales. They’re hard to study because they go underwater and they move around a lot. So even a little bit of knowledge about how they’re doing is hard to come by. We built a program that can analyze video data from a drone camera to evaluate how healthy a humpback whale is based on its length and width. Just like other animals, a really thin whale is probably a sick whale, so this simple observational technique alone is already a pretty important indicator of health.

The hard part of this, for a computer, is actually identifying the whale in a video frame. We trained a specialized convolutional neural network to identify whales in difficult conditions like the waters of Alaska, where the color of the whale isn’t much different from the color of the water, and the water tends to be murky.

Once the whale pixels are identified, we calculate the area of the whale that’s used to store energy (basically, everything but the head and the flukes), which is highlighted in pink in the picture above.
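To give a feel for the arithmetic involved, here is a minimal sketch of turning a pixel mask into physical measurements. It is not the actual tool: it assumes a camera pointing straight down, a known drone altitude and horizontal field of view, and a boolean mask that already covers only the energy-storing part of the body. The function names and the rectangular test mask are hypothetical.

```python
import math
import numpy as np

def ground_sample_distance(altitude_m, horizontal_fov_deg, image_width_px):
    """Meters of water surface covered by one pixel, for a downward-facing camera."""
    half_angle = math.radians(horizontal_fov_deg) / 2.0
    ground_width_m = 2.0 * altitude_m * math.tan(half_angle)
    return ground_width_m / image_width_px

def body_measurements(mask, gsd_m):
    """Return (area_m2, length_m, width_m) for a boolean body mask."""
    mask = np.asarray(mask, dtype=bool)
    # Each pixel covers gsd_m x gsd_m of surface.
    area_m2 = float(mask.sum()) * gsd_m ** 2

    # Use the principal axes of the mask pixels as the body's long and short axes.
    rows, cols = np.nonzero(mask)
    points = np.column_stack([cols, rows]).astype(float)
    points -= points.mean(axis=0)
    _, eigvecs = np.linalg.eigh(np.cov(points.T))  # eigenvalues in ascending order
    minor_axis, major_axis = eigvecs[:, 0], eigvecs[:, 1]

    along = points @ major_axis
    across = points @ minor_axis
    # Add 1 because the extent is measured between pixel centers.
    length_m = (along.max() - along.min() + 1.0) * gsd_m
    width_m = (across.max() - across.min() + 1.0) * gsd_m
    return area_m2, length_m, width_m

# Synthetic example: a 100 x 20 pixel rectangle standing in for a whale body.
mask = np.zeros((100, 400), dtype=bool)
mask[40:60, 150:250] = True

gsd = ground_sample_distance(altitude_m=30.0, horizontal_fov_deg=90.0, image_width_px=400)
area_m2, length_m, width_m = body_measurements(mask, gsd)
print(area_m2, length_m, width_m)
```

The pixel-to-meter conversion depends directly on altitude, so any error in the altitude reading scales the length and width by the same factor and the area by its square.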

The technique worked pretty well, though it requires considerable input from an expert user in its present form (e.g. what was the altitude of the drone, what is the sex of the whale, what day of the season is it, and in the case of a mother, how long has it been since the calf was born). We’ll be working to make it easier to use, since this kind of health assessment is an important first step before any kind of interaction with a whale. In fact, I’m supervising an intern, Kelly Cates of the University of Alaska at Fairbanks, to help extend the tool.

We presented a poster about this work at the Society for Marine Mammology in October 2017, and just a couple of months later, Iain and I presented together at the Consumer Electronics Show in Las Vegas, which was quite an experience as well.

Photo by Christina Caputo

I just received news that the video that was made about our collaboration was nominated for a Webby award! You can see it here.

I also went down to Mexico back in February with Parley and Ocean Alliance to capture images of blue whales and grey whales, to see about expanding the health assessment tool to those species as well. Unfortunately, there were very few of either compared to the numbers that were seen even last year. One hesitates to draw conclusions, but it does underscore the urgency of getting a better understanding of the overall population health of whales, who are so important to our ecosystem, our oceans, and our own survival.

Important Note: Flying drones is an activity that is heavily regulated in many parts of the world, and you should check your local regulations before flying any drone. It is especially important to understand that in the United States, it is illegal to fly a drone anywhere near a whale without a very special permit from the National Oceanic and Atmospheric Administration.